Re-Engineering the Cloud-Native Data Center for an Era of AI-Native Workloads

The rise of artificial intelligence is reshaping the data center landscape, along with the underlying infrastructure. Merely being able to run distributed workloads is no longer enough.

The architectural transformation goes beyond incremental upgrades—it requires fundamentally rethinking data center design and operations.

The Rise of Accelerator-Centric Architectures

Traditional data center architectures, built around CPU-driven compute, burn money when it comes to AI workloads, which call for massively parallel hardware acceleration.

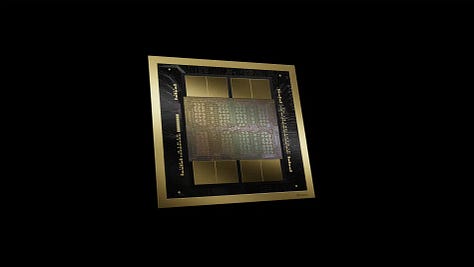

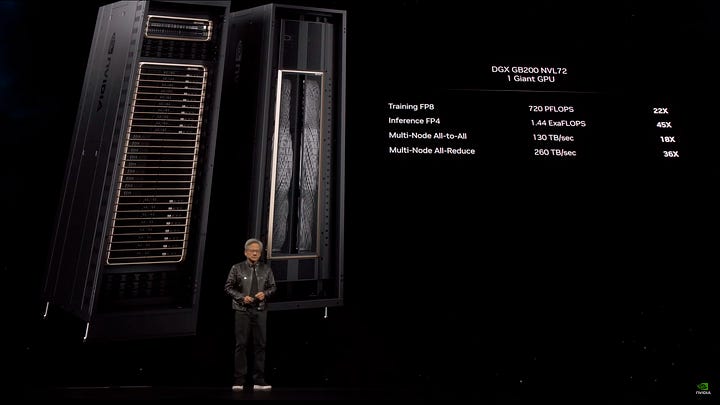

GPUs, TPUs, IPUs (from the likes of Graphcore) and other specialized accelerators have emerged as critical components of AI infrastructures that keep growing in sophistication.

CPUs (Central Processing Units) excel at general-purpose computing but struggle with AI, whose workloads are dominated by massively parallel matrix math.

GPUs (Graphics Processing Units) are designed for parallel processing; they ace AI training and graphics rendering but draw a lot of power, which makes energy efficiency their sore point. In NVIDIA's lingo, GPUs and a CPU combined on one board make a Superchip: the GB200 Grace Blackwell Superchip, for instance, pairs two Blackwell GPUs with one Grace CPU.

TPUs (Tensor Processing Units) are Google's invention; they are purpose-built for the matrix multiplications at the heart of neural networks.

DPUs (Data Processing Units), such as NVIDIA's BlueField DPU or some of AMD's Xilinx innovations, specialize in moving and managing data in large AI systems, offloading networking, storage, and security tasks from the host CPU. Other notable examples come from Broadcom and Marvell Technology.

Intel calls its DPUs IPUs (Infrastructure Processing Units, not to be confused with Graphcore's Intelligence Processing Units): specialized hardware accelerators designed to offload infrastructure-related tasks such as packet processing, traffic shaping, and virtual switching from CPUs in data centers and cloud environments.

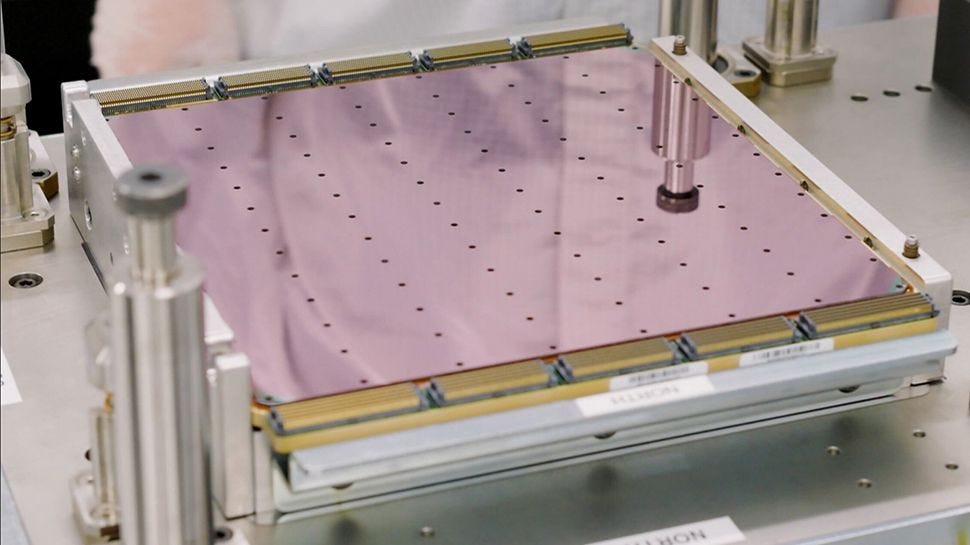

FPGAs (Field-Programmable Gate Arrays) excel at customization because they can be reprogrammed after manufacturing, which also makes them well suited to prototyping and small production runs. ASICs (Application-Specific Integrated Circuits), by contrast, are custom-designed for one specific task, which gives them an edge over FPGAs in performance and energy efficiency.

IPUs (Intelligence Processing Units) are optimized for graph processing and sparse data structures, making them particularly effective for analyzing interconnected data, such as in social networks or recommendation systems. One notable example is the Colossus IPU from Graphcore.

The emergence of specialized accelerators is driving data centers toward higher power density. This, in turn, calls for liquid cooling, often necessitating specialized rack designs.

Given AI's exploding appetite for data, low-latency, high-throughput networking becomes a prerequisite for delivering that data to the accelerators.

Ever-growing power density calls for intelligent power distribution systems capable of handling unprecedented demand spikes, which occur when large numbers of hardware accelerators ramp up concurrently to run massively parallel workloads.

Power distribution units (PDUs) and UPS systems have to be re-engineered to match the load characteristics of AI workloads at scale.
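To see why concurrency is the crux, consider the largest instantaneous jump in load that the power chain has to absorb. The sketch below is a back-of-the-envelope model under stated assumptions: each accelerator is treated as stepping straight from an idle draw to a peak draw, and the wattages are hypothetical placeholders rather than vendor specifications. Staggering start-up in batches appears only as an illustrative comparison, not as a technique the article prescribes.

```python
import math


def largest_load_step_w(num_devices: int, idle_w: float, peak_w: float,
                        batches: int = 1) -> float:
    """Largest single jump in aggregate draw (watts) when devices go from
    idle to peak, started in `batches` equally sized groups.

    Simplifying assumption: each device steps instantly from idle_w to
    peak_w; real ramp profiles are smoother and hardware-specific.
    """
    if batches < 1:
        raise ValueError("batches must be >= 1")
    devices_per_batch = math.ceil(num_devices / batches)
    return devices_per_batch * (peak_w - idle_w)


# Hypothetical cluster: 1,024 accelerators, 100 W idle, 700 W peak each.
sync_step = largest_load_step_w(1024, idle_w=100, peak_w=700)
staggered_step = largest_load_step_w(1024, idle_w=100, peak_w=700, batches=8)
print(f"all at once: {sync_step / 1e3:.1f} kW, in 8 batches: {staggered_step / 1e3:.1f} kW")
```

With these placeholder numbers, a synchronized start produces a single step of about 614 kW, versus about 77 kW per step when the same devices start in eight batches; the steady-state total is identical, only the swing differs.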

Hot and Liquid

AI chips consume substantially more energy and produce more heat than conventional processors. As a result, liquid cooling is on the rise in every shape and form conceivable: direct-to-chip (cold plate) cooling, immersion cooling, hybrid air-liquid cooling, you name it.

You can bid farewell to your hot aisle containment!

Hot air is out, that’s for sure (and not quite the way it was originally intended…).

At this level of power density, heat reuse is becoming a compliance issue in a growing number of jurisdictions.

That spells more pipes!
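How many more pipes? A rough sizing follows from the heat balance P = ṁ · c_p · ΔT: the coolant mass flow ṁ needed to carry away a heat load P grows as the allowed temperature rise ΔT shrinks. The sketch assumes plain water (c_p ≈ 4186 J/(kg·K), density ≈ 1 kg/L) and a hypothetical 100 kW rack; real loops often use water-glycol mixtures with different properties, and the ΔT values are illustrative.

```python
WATER_CP_J_PER_KG_K = 4186.0   # approximate specific heat of liquid water
WATER_DENSITY_KG_PER_L = 1.0   # approximation; roughly 0.997 kg/L at 25 °C


def coolant_flow_l_per_min(heat_load_w: float, delta_t_k: float) -> float:
    """Water flow (litres/minute) needed to remove heat_load_w watts while
    the coolant warms by delta_t_k kelvin, from P = m_dot * c_p * delta_T."""
    if delta_t_k <= 0:
        raise ValueError("delta_t_k must be positive")
    mass_flow_kg_per_s = heat_load_w / (WATER_CP_J_PER_KG_K * delta_t_k)
    return mass_flow_kg_per_s / WATER_DENSITY_KG_PER_L * 60.0


# Hypothetical 100 kW liquid-cooled rack at two different temperature rises.
for dt in (10.0, 5.0):
    print(f"dT = {dt:>4} K -> {coolant_flow_l_per_min(100_000, dt):.0f} L/min")
```

Halving ΔT doubles the required flow (about 143 L/min versus about 287 L/min for this hypothetical rack), so every increase in rack density translates directly into more piping and pumping capacity.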

High-Speed, Low-Latency Networking

AI training requires ultra-fast, high-bandwidth data transfer. Traditional networking falls short of these demands. AI workloads push data centers toward:

100 Gbps to 400 Gbps networking and beyond, using technologies such as InfiniBand and high-speed Ethernet.

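Software-defined networking (SDN) and specialized, AI-friendly protocols such as RDMA (Remote Direct Memory Access, over InfiniBand or RoCE) and NVMe over Fabrics (NVMe-oF), which minimize latency and accelerate data transfers by sparing the host CPU much of the work.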
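Why link speed matters becomes clearer with a bandwidth-only estimate for synchronizing gradients in data-parallel training. The sketch assumes a ring all-reduce, a common collective pattern in which each of N nodes sends 2(N - 1)/N times the payload over its link; it ignores per-message latency, protocol overhead, and overlap with computation. The 10 GB payload and 16 nodes are hypothetical.

```python
def ring_allreduce_seconds(payload_bytes: float, num_nodes: int,
                           link_gbps: float) -> float:
    """Bandwidth-only estimate of one ring all-reduce.

    Each node transmits 2 * (N - 1) / N * payload_bytes over its link.
    Latency, protocol overhead, and compute/communication overlap are ignored.
    """
    if num_nodes < 2:
        return 0.0  # a single node has nothing to synchronize
    bytes_sent_per_node = 2 * (num_nodes - 1) / num_nodes * payload_bytes
    return bytes_sent_per_node * 8 / (link_gbps * 1e9)


# Hypothetical job: 10 GB of gradients synchronized across 16 nodes.
for gbps in (100, 400):
    t = ring_allreduce_seconds(10e9, num_nodes=16, link_gbps=gbps)
    print(f"{gbps} Gbps: {t:.3f} s per synchronization")
```

Under these assumptions, moving from 100 Gbps to 400 Gbps cuts each synchronization from 1.5 s to 0.375 s; repeated over thousands of training steps, the difference adds up quickly.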

Storage and Data Pipeline Optimization

AI workloads deal with massive datasets that need rapid access. This drives infrastructure changes toward:

High-performance, scalable storage (NVMe SSDs, object storage, specialized data caching).

Data pipeline optimization: the rise of streaming data infrastructures and storage architectures designed explicitly for AI training and inferencing workflows.
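A quick way to size that "rapid access" is to multiply out how fast the accelerators consume samples. The sketch below assumes every sample is read from the backing store each time it is used unless a cache serves it; the accelerator count, per-device throughput, sample size, and cache hit ratio are all hypothetical placeholders.

```python
def required_read_gb_per_s(num_accelerators: int, samples_per_s_each: float,
                           bytes_per_sample: float,
                           cache_hit_ratio: float = 0.0) -> float:
    """Aggregate read bandwidth (decimal GB/s) the backing store must sustain
    to keep every accelerator busy. cache_hit_ratio is the fraction of samples
    served from a cache instead of the backing store (0.0 = no cache)."""
    if not 0.0 <= cache_hit_ratio <= 1.0:
        raise ValueError("cache_hit_ratio must be between 0 and 1")
    total_bytes_per_s = num_accelerators * samples_per_s_each * bytes_per_sample
    return total_bytes_per_s * (1.0 - cache_hit_ratio) / 1e9


# Hypothetical: 512 accelerators, 2,000 samples/s each, 100 KB per sample.
uncached = required_read_gb_per_s(512, 2_000, 100e3)
cached = required_read_gb_per_s(512, 2_000, 100e3, cache_hit_ratio=0.75)
print(f"uncached: {uncached:.1f} GB/s, with 75% cache hits: {cached:.1f} GB/s")
```

With these placeholder numbers, the backing store would need to deliver about 102.4 GB/s uncached, or about 25.6 GB/s if three quarters of reads hit a cache, which is why caching layers sit alongside fast NVMe and object storage.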

On Edge

AI isn't limited to the beefed-up core data center. AI-driven applications such as autonomous vehicles, IoT, or real-time video analytics require distributed edge infrastructures. This trend includes:

Smaller, distributed data centers closer to end users.

Edge-optimized AI inference hardware to minimize latency.
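The latency argument for edge sites comes down partly to physics: light in optical fiber covers roughly 200 km per millisecond, so distance alone sets a floor on round-trip time that faster hardware cannot remove. The sketch below computes that floor only; it ignores routing detours, queuing, and processing time, and the distances are hypothetical.

```python
FIBER_KM_PER_MS = 200.0  # approx. speed of light in glass fiber (about 2/3 of c)


def fiber_rtt_floor_ms(distance_km: float) -> float:
    """Lower bound on round-trip propagation delay over fiber, in ms.
    Real paths are longer than the straight-line distance and add switching,
    queuing, and processing delays on top of this floor."""
    if distance_km < 0:
        raise ValueError("distance_km must be non-negative")
    return 2 * distance_km / FIBER_KM_PER_MS


# Hypothetical comparison: a distant core region versus a nearby edge site.
for label, km in (("core region", 1_500), ("edge site", 50)):
    print(f"{label}: at least {fiber_rtt_floor_ms(km):.1f} ms round trip")
```

A 1,500 km path costs at least 15 ms per round trip before any computation happens, versus 0.5 ms for a site 50 km away, which matters for control loops like those in autonomous vehicles or live video analytics.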

Software-defined. Automated. Autonomous.

The complexity and scale of AI workloads accelerate the adoption of automation technologies:

Increased deployment of Kubernetes, software-defined networking, and composable infrastructure.

Automated resource management that dynamically allocates compute, storage, and network resources based on real-time AI workload demands.
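To make automated resource management a little more concrete, here is a toy placement routine for accelerator requests. It is a hypothetical best-fit-decreasing heuristic chosen for illustration, not how any particular orchestrator works: production schedulers such as the Kubernetes scheduler weigh many more signals (memory, topology, priorities, storage, and network), and the node and job names below are made up.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Node:
    name: str
    free_gpus: int
    jobs: List[str] = field(default_factory=list)


def place_jobs(requests: Dict[str, int], nodes: List[Node]) -> Dict[str, Optional[str]]:
    """Best-fit-decreasing placement of GPU requests onto nodes.

    Largest requests are placed first; each goes to the node that would be
    left with the fewest free GPUs, keeping larger gaps open for later big
    jobs. Jobs that fit nowhere map to None (a real system would queue them).
    """
    placement: Dict[str, Optional[str]] = {}
    for job, gpus in sorted(requests.items(), key=lambda kv: kv[1], reverse=True):
        candidates = [n for n in nodes if n.free_gpus >= gpus]
        if not candidates:
            placement[job] = None
            continue
        best = min(candidates, key=lambda n: n.free_gpus - gpus)
        best.free_gpus -= gpus
        best.jobs.append(job)
        placement[job] = best.name
    return placement


# Hypothetical cluster and workload.
cluster = [Node("node-a", free_gpus=8), Node("node-b", free_gpus=4)]
demand = {"train-llm": 6, "etl": 4, "inference": 2, "huge-sweep": 16}
print(place_jobs(demand, cluster))
```

Here `huge-sweep` fits on no single node and stays unplaced, while the other three jobs pack both nodes completely. A dynamic system would rerun placement as requests arrive and jobs finish.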

Modular and Scalable

To keep pace with AI growth, data centers are looking into modular, containerized, and prefabricated infrastructures. Not only can these scale quickly in response to fluctuating demand but, just as importantly, they take comparatively little time to set up.

In other words: the ability to ramp up raw power quickly has never been more important.